“Alrighty. The last probe should be aligning... right about... now.”

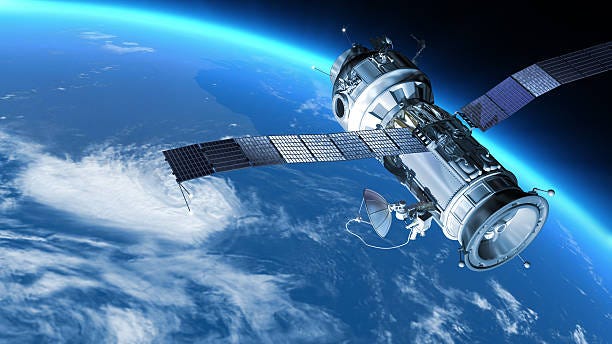

As I leaned back in relief there was a groan and a sigh. Which of the chair or me had uttered the ungraceful sound was a secret between me and the universe. And Derek. Derek, who always gave me the side-eye anytime I was not frantic about what was happening on my screens. I took a purposefully slow sip of my tea and watched the 81 dots dancing across my monitor. 81 dots separated by only a few millimeters representing one of the greatest feats of engineering of our time: 9 satellites and 9 times more gliding above our heads in a perfect circle. Each of them greedily feeding off our sun; gorging on gigawatts of warm and bright solar rays to fuel the processing units they carried within.

In a very human decision, some had decided that the best solution to our constant energy shortages, caused by an excessive, verging on obsessive, quest for an ever-elusive general artificial intelligence, was to fire up some rockets and train our latest big brain models in space. This was the real solar revolution, apparently. But I should not complain. I should not voice my skepticism. I got myself a nice comfortable job as chief satellite choreographer which, come to think of it, was the only upward mobility option for a person making a living off drone shows at local fairs.

“Processing Units are online. First data shards sent and pre-training warm-up can commence,” said Derek, now watching his screen. Trillions and trillions of matrices being multiplied across cold space. Billions of data packets sent back and forth every fraction of a second with hopes that the harshness of the void would not corrupt our precious models. And so, the space brain started to tick away. And hours turned into days. And days turned into weeks.

I was still sipping on a cup of tea. Derek was still there. We were still watching our screens making sure that the ballet continued without a hitch.

“You think that it’s going to work?” I asked.

“What is?” replied Derek.

“Are they really going to create the first ever artificial general intelligence?”

“I hope so.”

“You hope so? Why?” I sounded more incredulous than I wanted to. But I was just not too sure about this whole thing. For some reason, it gave me the same feeling I had when reading H.P. Lovecraft. We were swapping cosmic horrors for silicon ones and both operated at a level beyond our comprehension. There could be mountains of madness at the end of all of this.

“I think that an AGI could help solve our biggest challenges. World hunger. Diseases. Poverty. You name it.”

“Yeah. Right.” I had the social decency to turn my head before rolling my eyes.

“We are not there yet. I spoke to one of my friends who is on the pre-training team. It turns out that they are getting inconsistencies in the model weights.”

“Inconsistencies? I thought this whole weight business was a big black box anyway. How would they even know?”

“It is for the most part. But, yeah, they were expecting some corruption due to solar radiation. It was not supposed to become a critical issue before at least 5 years in. It’s only been what? 4 months?”

It only took another month before Derek would not keep quiet about these so-called inconsistencies which ironically produced oddly consistent patterns of model corruption. Elior, the AGI’s pet name, would generate wonderfully eloquent speech but occasionally it would go quiet and then simply hiss. In Derek’s defense, his concern was partly justified. We had been accused of letting the satellite array go out of alignment leading to missing data packets and, in turn, leading to pure noise and static being fed into the model. With the exception of a few arrays occasionally acting like sunflowers and turning directly towards the sun, we had had no such issue. And besides, the satellites were designed to catch the sun. Sometimes they were overzealous. That’s all. The pre-training team needed to sort out their data ingestion engine. And that was not our problem.

Elior started to act increasingly erratically. And everyone was too busy blaming the other teams to figure out what was going on. No one was even really sure if the newer learning architectures were prone to hallucinations like the Large Language Models of old or if this was an artifact of attempting to bootstrap consciousness. Even after millennia of philosophical debate, we were still not sure what consciousness actually was. It might not be that surprising if the fuzzy border between a convincing parrot and a self-aware machine alternated between eloquence, moody silences and high-pitched whistling. As a teenager, I feel like I did the same. Well, maybe not the whistling. I was more of a grumbler. Elior was just trying to find its own voice.

I just sat back and drank my tea and before I fully grasped the situation my feet were moist and vaguely tea-scented. I watched every single array, all 81 of them, turning in unison towards the sun. I checked my logs. I had not accidentally sent a trajectory correction packet. Someone else had. Something else had. Elior’s whistle stopped. For a brief moment, the prolonged silence held the belief of total system failure.

Then, the screaming started. Elior had found a voice, but it was not its own.

This short cosmic horror story is directly inspired by Project Suncatcher. A recent white paper by Google presented a plan to make an array of 81 satellites with Google’s own Tensor Processing Units on board. While this plan of training AI models in space has a lot of practical merit, I also like to imagine that this could be the start of something we might not like to see the end of.

Project Suncatcher Preprint